Last Update: 2024 - 05 - 21

Query Performance Tuning – Basic Universal Rules

by Philipp Stiefel, originally published 2020-12-27

last revision on 2020-12-22

Performance tuning of Microsoft Access queries is often something of a black art. It is either educated guessing, trial and error, or using arcane analyzation tools, such as the JetShowPlan flag to output the query execution plan of the database engine.

However, there are a few basic universal rules to optimize database query performance that are generally true for (almost) every query and applicable to all relational database management systems (DBMS) using the SQL query language.

I recorded a video demonstrating all the performance optimizations discussed here. So, you can choose whether you prefer to watch the video or read the text here.

Test Environment

To eliminate side effects such as network latency and bandwidth fluctuations, concurrent server load, and database and operating system caching, I use a copy of the Northwind sample database on my local computer. To make the optimizations have an actual effect, I loaded up the database with a significant number of records (100K Customers, 1.5M Orders). Nevertheless, in this scenario the differences between the variations of the queries are only seconds or even milliseconds. Keep in mind that these differences will be exponentially bigger in a real production environment with multiple users all using the same database and infrastructure at the same time.

All the criteria columns in the following examples are indexed. This is generally recommended for most columns you frequently use in query criteria. If you apply the following recommendations to non-indexed columns the optimizations will have much less or no effect at all.

Text Comparison with LIKE

When searching for partial matches of text we use the LIKE operator in SQL. Often queries with LIKE are written to match any part of the data in the searched column. This prevents the database engine from using an index to find the matches.

If the requirements allow it, convert the query to search from the beginning of the data. Then the index will be used, and query will become significantly faster.

There is no more measurable performance difference to a query testing for full equality.

To illustrate the magnitude of the differences in a real-world scenario I compared the execution times of the above queries with a network backend database (same data volume) and no caching in place.

LIKE '*schmidt*' – Execution time: 30 seconds

LIKE 'schmidt*' – Execution time: 1.6 seconds

Subsequent executions of the same query will then perform much more like the examples with a local database because the data is cached and does not need to be read over the network from disk again.

Avoid Inequality Criteria

Using the inequality operator or inverting an equality check with the NOT keyword also prevent the database engine from using an index in finding the matching values.

If you can rewrite such a query to either use the less/greater than operator or a list of the desired values, it allows index usage and will run much faster. In my sample data the two following queries will produce the exact same results.

Of course, using hardcoded values in a query is often not an ideal solution.

Multiple Tables

Continuing the line of the previous examples, we change the hardcoded Shipper Id values to a query using a Boolean attribute in the Shippers table to indirectly get the Id(s) of the relevant shipper records.

Here is the suboptimal variant using the inequality comparison, written here as NOT IN.

As above, the approach of inverting the criteria to explicitly query for the desired records instead of excluding the non-desired will significantly improve query performance.

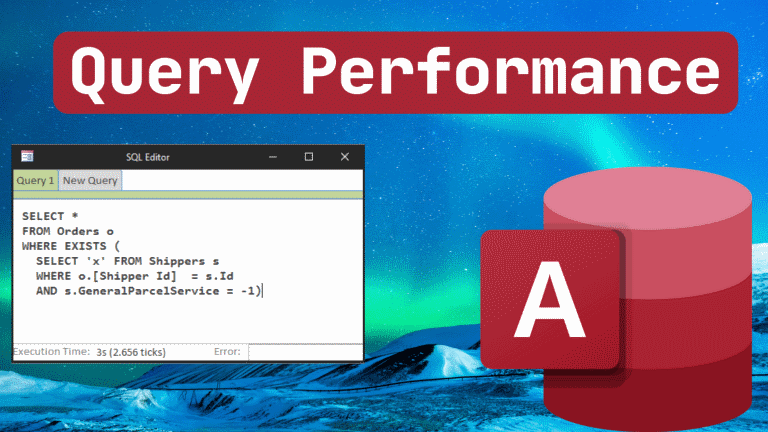

The query can also be written with an EXISTS clause. However, we enter black art terrain here because there is no hard and clear rule when to use which.

A much clearer situation is comparing the sub select statements to a query using a JOIN to get the same results. In almost all cases of Access queries a JOIN will perform much better than a similar query using a sub select.

Custom VBA Functions

It is a common myth that using custom VBA functions in queries will make the query slow. – While this is not entirely false, it is much to broad and if done right they will do no harm to your query’s performance.

Here is a query using a VBA function in the where condition of the query.

The FormattedAddress functions is just doing basic string concatenation to format an address for printing an address label adjusted to the conventions of the destination country.

Using any VBA function to process the data in the table and then comparing the results to the criteria will once again prevent the use of any index in addition to requiring a lot of computing power to process the data from each row in the table.

Using the individual criteria values on the raw table columns will produce much better performance.

Using the function call as an output column does not notably affect performance, because it is only executed for the few rows comprised in the result set.

Built-In Functions

Functions built-into the Access/VBA environment execute quicker because they are written in C++, there also some function built directly into the ACE/JET database engine further streamlining their execution in a query.

However, this does not really change the fundamental problems with function usage in query criteria. Once you use a function on table data, all the data in the table must be processed to evaluate the condition. This is such a huge hit on performance that the slightly more efficient function execution is hardly noticeable.

Here is a query returning all orders in December 2020.

If the query is written using the start and end dates of the date range it will execute much faster.

If you want to generate the date range dynamically, e.g., to always show the order of the current month, you can use functions in the where condition. However, you must only use them on the query criteria values, not on the data in the table.

Optimizing Function Use in Query Criteria

Sometimes it cannot be avoided to use a function on table data. One example is querying with case sensitive criteria. Access (the ACE/JET-Engine) strictly uses case insensitive comparison for text data. If you need to match the exact case of the criterion, you need to use the StrComp function.

Of course, this triggers the performance penalties explained above.

We can mitigate the problem by combining the case sensitive criteria with the very same criteria just using the standard comparison. This will make the engine execute an optimized query on the basic criteria first. Only in a second step the function will be invoked on the remaining records in the intermediate result set and thus have only a very small impact on the performance.

Testing Performance

It is a very common mistake to develop and test application performance only with a small number of sample records. This will always run blazingly fast, but it will not provide any meaningful performance metrics.

Whenever you want to realistically assess the performance of your application you need a realistic volume of data. Ask you client about the amount of data he expects to store in your applications database. Then create sample data matching the expected production data in volume. This might not be feasible for extremely large (SQL Server) databases. Then at least generate at least enough data to make the database really work to process your queries. A query that is optimized to handle 10 million of records will probably also be the best query if it needs to tackle 10 billion of rows.

Conclusion

Query tuning is a complex topic and requires experience to master it. This text and the accompanying video guide you the first steps along the way. If you simply memorize the examples showed here, you can already improve many queries you will encounter in your work. It also creates the foundation to build in-depth knowledge of query performance tuning.

I will never share your email with anyone. You can unsubscribe any time.

This email list is hosted at Mailchimp in the United States. See our privacy policy for further details.

© 1999 - 2024 by Philipp Stiefel - Privacy Policiy