Last Update: 2024-01-25

Case Study: SQL Stored Procedure vs. .net Class Library for Data Aggregation

Over the past week, I spent quite a lot of time rewriting a MySQL Stored Procedure that did some extensive data transformations for reporting purposes. The rationale behind this rewrite and the results are somewhat remarkable, so I’d like to share these thoughts and conclusions with you.

Starting point

I wrote the procedure in question some three years ago. It was in production all this time, but the users of the application rarely used its results because the input data for it (provided by a third party) was incomplete, and hence so were the results.

The procedure would read input data from a table and aggregate that data for reporting purposes. The input data is mainly produced by IoT devices and there is a pretty huge volume of it. The transformation is anything but straightforward. It requires a complex analysis of the timeline of the data. That means much of the data needs to be processed row by row in the sequence it was originally recorded. In addition to just aggregating the data for particular time frames, lots of backward lookups on the data of previous time ranges are required to correctly categorize the data of the current time range.

An important detail of this whole “case study” that you should be aware of is that all that sequential processing and the lookups on raw or partially aggregated data made this a pretty bad piece of logic to implement in a stored procedure. It requires storing intermediate results in temporary tables and running frequent lookup queries against those temporary tables. That is an approach you usually try to avoid in relational databases because of its bad performance.
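To make the shape of that problem more tangible, here is a minimal sketch in Python (chosen only for brevity; it is neither the original procedure nor the later VB.net code). The hourly windows, the `Window` class and the "rising" rule are all invented for illustration, because the real categorization rules are not described here. The point is only that the backward lookup becomes a plain reference to the previous in-memory object instead of a query against a temporary table.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Window:
    """Aggregate for one hourly time range (hypothetical structure)."""
    start: datetime
    total: float = 0.0
    category: str = "first"  # the first window has no predecessor


def aggregate(readings):
    """Aggregate (timestamp, value) readings into hourly windows.

    Readings are processed in the order they were recorded. Each window
    is then categorized by looking back at the previous window.
    """
    windows = []
    for ts, value in sorted(readings, key=lambda r: r[0]):
        start = ts.replace(minute=0, second=0, microsecond=0)
        if not windows or windows[-1].start != start:
            windows.append(Window(start))
        windows[-1].total += value

    # Backward lookup: compare every window with its predecessor.
    # The rule itself is an assumption made up for this sketch.
    for prev, cur in zip(windows, windows[1:]):
        cur.category = "rising" if cur.total > prev.total else "not rising"
    return windows
```

In a stored procedure, the list of windows would typically live in a temporary table, and every comparison with the previous window would be another query against it.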

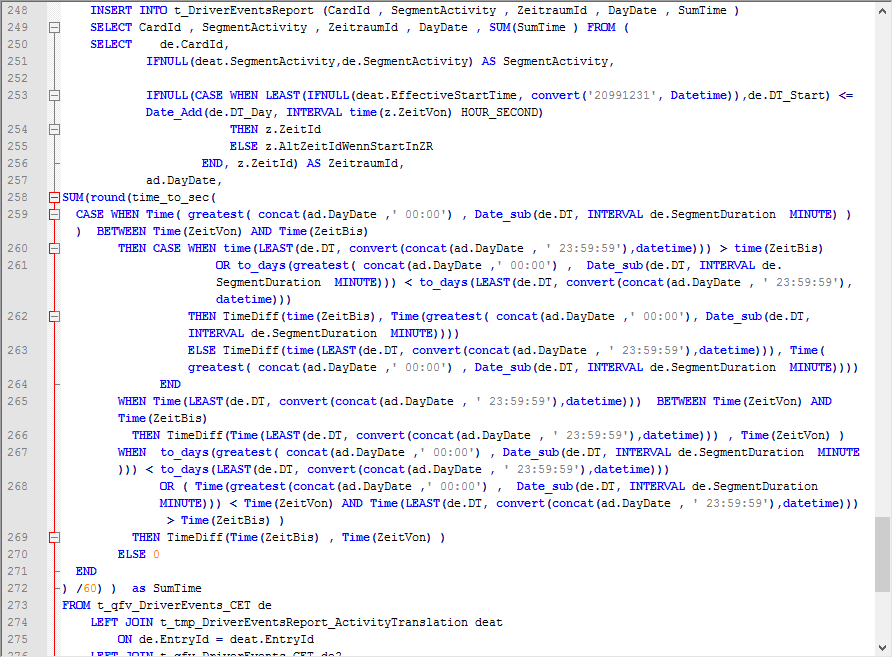

Here is a screenshot of one of the main SQL statements of the procedure. I only include it here to give you an idea of its complexity. Don’t try to read and understand the code.

The procedure ran for between one and two hours and was scheduled as an automated job in the middle of the night so as not to degrade the performance of the application during office hours. The “testing process” used until now was to compare the results of the procedure with results that were calculated manually and entered by users. So, for every test the procedure needed to crunch the whole data set for at least a month to produce results comparable to those of the users’ manual calculations.

That was a very bad situation, because it made testing against real-world data very difficult and time-consuming. During the last couple of months, lots of additions to this whole process were required. I always refrained from touching this MySQL procedure because it was a monster of complexity and testing its results was very time-consuming. I just preprocessed any input data to be compatible with what the procedure expected, simply because I was terrified of touching the procedure itself.

Then a user reported a bug that was clearly inside the current implementation of the procedure itself…

To Rewrite or not to Rewrite

After that bug report, there was no other option than to touch the procedure to fix the bug.

There was no automatic testing in place to make sure I did not break anything else when fixing the bug. That is a very unpleasant situation for such a complex routine. I had the idea to rewrite the procedure in an object-oriented language (C# or VB.net) that would allow for much better automatic and immediate testing via unit tests.

So, this is actually the only clear Pro for a rewrite. A pretty significant one, though.

Let’s look at the Cons now.

First, throwing away significant portions of code that are mostly working, just to improve them and to fix some bug, is always a gamble.

I know, developers love to (re)write code, because that is much more thrilling and fun than just poking around in a heap of old garbage, which is obviously not working correctly. But rewriting any code from scratch is almost always a bad idea from an economic point of view.

Why do you think you will get it right this time if you failed before? Even if there is reason to believe that, because you might know the problem domain much better now, it is still often unreasonable to believe a rewrite is more efficient economically than just fixing the part of the old code that is not working as it should. Software developer legend Joel Spolsky categorizes this as a thing you should never do.

Second, I am a firm believer in keeping any data processing routines as close to the data as possible. So, the best option to process and transform data in a MySQL database is a Stored Procedure right in that database. That eliminates any data transfer over the network. There is no data access boilerplate code required because you access all the data directly with SQL. SQL is usually much more efficient at crunching huge volumes of data than procedural logic in another language.

The Decision

The performance considerations in particular made me very worried about moving away from the Stored Procedure implementation. The procedure already ran for more than an hour to process the data. Of course, there were those aspects of the processing that made this task a poor fit for a Stored Procedure. So, I had hope that performance would improve, but still, I was afraid performance might be even worse with a client-side solution in VB or C#.

Still, considering the huge problem with testability, I decided to rewrite the code in VB.net. I estimated I would be able to do it in 8-12 hours.

Of course, it would have been possible to create some automated tests for the existing stored procedure. Yes, that would have significantly improved the situation. But that would have been limited to creating input data for the proc, running it and then comparing the actual results with the expected ones. That is not too bad, but it would only be possible to test the whole transformation process as one big black box. If the results were incorrect, there would have been no clue as to where exactly the error is.

I started to rewrite…

The Coding

I put the main logic of the code into a VB.net class library, which is hosted inside a very small and basic console application. The application was intended to be run by Windows Task Scheduler during the night.
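The split between library and host can be sketched roughly as follows. This is a Python illustration under my own assumptions, not the actual VB.net project: the names `summarize` and `run`, the row shape, and the per-device sum are hypothetical. The library part contains only logic, and the host part does nothing but connect data access to that logic.

```python
from typing import Callable, Dict, Iterable, Tuple

# (device id, measured value): a hypothetical row shape for this sketch
Row = Tuple[str, float]


def summarize(rows: Iterable[Row]) -> Dict[str, float]:
    """Library part: pure logic without any database access."""
    totals: Dict[str, float] = {}
    for device, value in rows:
        totals[device] = totals.get(device, 0.0) + value
    return totals


def run(load: Callable[[], Iterable[Row]],
        save: Callable[[Dict[str, float]], None]) -> None:
    """Host part: only wires data access to the library logic.

    In a real host, `load` and `save` would talk to the database.
    In a test, they can be simple in-memory stand-ins.
    """
    save(summarize(load()))
```

Because the host is so thin, almost everything worth testing lives in the library part and can be exercised without a database connection.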

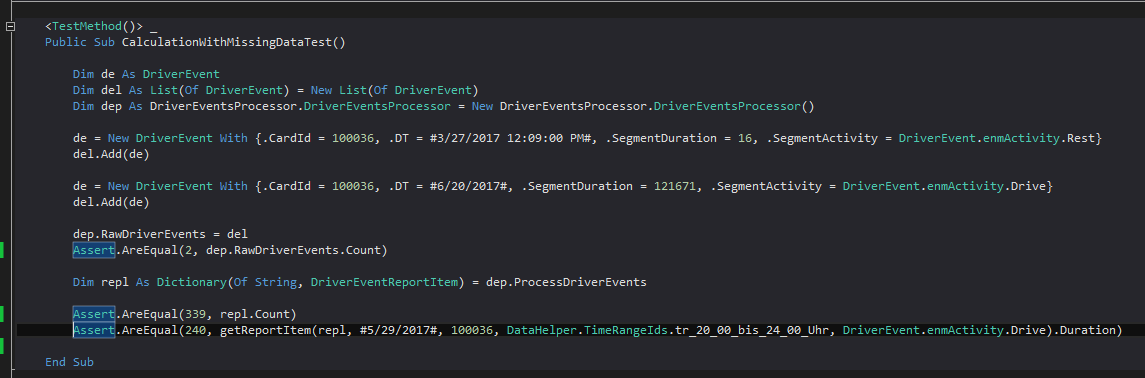

Writing the code was actually quite a bit of fun. The complexity of the data analysis and aggregation was still significant. But it made a huge difference to be able to split all the logic into much smaller chunks of VB procedures and functions. For testing, I used the unit test framework included with Visual Studio (MSTest), which follows the same attribute-based style as NUnit.

I put a huge emphasis on testability and actually wrote most of the code test-first. That means I wrote a small unit test for the code I intended to write next. Then I wrote the actual code until the test passed. Then came the next test for the next tiny piece of functionality to be written. Rinse, repeat…
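One round of that cycle might look like the following sketch. It uses Python's built-in `unittest` module instead of the Visual Studio framework, and the helper `window_start` is a hypothetical example rather than a function from the real library. The two tests were the starting point, and the function body is just enough code to make them pass.

```python
import unittest
from datetime import datetime


def window_start(ts: datetime) -> datetime:
    """Return the start of the hourly window containing ts (hypothetical helper)."""
    return ts.replace(minute=0, second=0, microsecond=0)


class WindowStartTests(unittest.TestCase):
    def test_rounds_down_to_full_hour(self):
        self.assertEqual(
            window_start(datetime(2024, 1, 25, 10, 59, 59)),
            datetime(2024, 1, 25, 10),
        )

    def test_full_hour_is_its_own_window(self):
        self.assertEqual(
            window_start(datetime(2024, 1, 25, 11)),
            datetime(2024, 1, 25, 11),
        )
```

Saved to a file, these tests can be run with `python -m unittest` followed by the file name. A failing test then points directly at the small function that is wrong, rather than at the whole transformation.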

Despite the complexity, I always had the confidence that the code I had written was actually doing what I wanted it to do, even though I was not able to run the whole transformation logic yet. I was confident in making changes to the code, even significant ones, without the fear of breaking things and introducing new bugs.

Whenever I actually broke things, and I did that quite a lot, any problem could be fixed pretty fast, because the existing test harness caught the error early and it indicated clearly where exactly the problem was.

The Outcome

My assumption about the effort required was fundamentally wrong. Instead of the estimated 8-12 hours, it took me closer to 30 hours to complete the rewrite.

When I first ran the complete data processing routine with live data, I expected it to take at least as long as the replaced stored procedure (>1 hr.). So, I started program execution and left my desk to take a break and brew a fresh pot of coffee. The program finished before I had left the room. WTF?!

At first, I thought there must have been an error that caused premature termination.

There wasn’t! The whole data set for a month was completely processed in a little more than 30 seconds. I needed a while to accept this fact...

Later I manually verified the results of my new computation routine against the data previously checked by the users of the application. It was spot on.

Conclusion

There are two big conclusions for me here.

The assumption that having a unit test harness in place would significantly improve the manageability and maintainability of the code proved to be correct. It made a huge difference during coding, and this benefit will grow further if I need to go back and enhance the logic sometime in the future. This test harness eliminates the fear of breaking something with a small change whose consequences you cannot fully foresee.

An automatic test harness for your code is a huge bonus whose value will increase over the lifetime of the code under test.

The object-oriented design with classes, which themselves contain the data they work on, proved to be much more efficient in regard to performance than I ever expected. While I clearly saw the possibility that the .net code would execute noticeably faster, I never dared to dream of the magnitude of the performance increase.

Complex, non-set-based processing of data can be implemented with massively better performance in a modern object-oriented language than in a SQL-based procedural language.

Despite these two very positive aspects of the result, you should never ignore the risk of rewriting existing code from scratch. The actual effort to rewrite the code was almost three times as much as I had estimated. This was only one Stored Procedure. If it had been a more substantial piece of code, an underestimate of this magnitude could have had disastrous consequences.

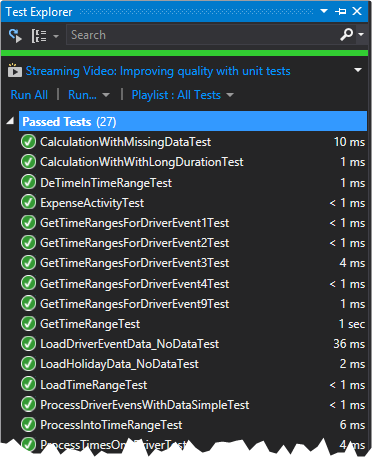

Comparison

I’ll wrap this topic up with a quick comparison of the statistics…

| | MySQL Stored Procedure | .net class library |
|---|---|---|
| Execution time | ~ 90 minutes | ~ 30 seconds |
| Lines of code * | ~ 350 | 802 |
| Lines of unit test code | 0 | 513 |
| Number of unit tests | 0 | 27 |
| Code coverage | 0% | 82.85% ** |
| Developer confidence | ★☆☆☆☆ | ★★★★★ |

*= Lines of code excluding unit tests, console application host, and Visual Studio project scaffolding. A small amount of code in the application is only there to support the unit tests and will never be used in production.

**= The code not covered is mostly code writing to the database, which is hard to test and has low risk of error.

© 1999 - 2024 by Philipp Stiefel